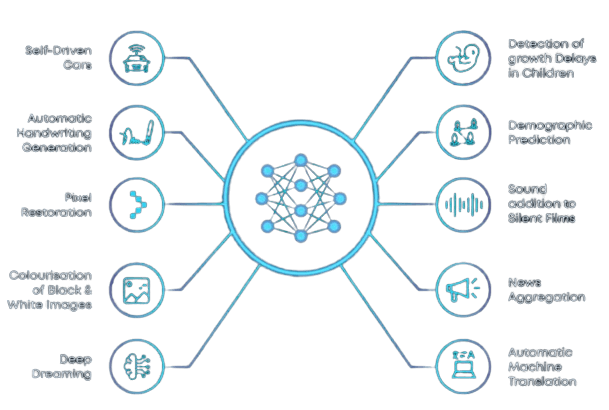

Introduction

Deep learning is a rapidly growing subfield of machine learning that uses neural networks with many layers to solve complex problems. With advancements in computing power and the availability of large datasets, deep learning has made significant strides in areas such as image recognition, natural language processing, and speech recognition. However, getting started with deep learning can be overwhelming, especially for beginners. In this article, we will explore the essential steps to help you learn deep learning effectively.

Step 1: Establish a strong foundation in linear algebra, calculus, and probability

Linear algebra, calculus, and probability are essential prerequisites for understanding deep learning algorithms and techniques. Linear algebra is used to represent data in vector and matrix formats, which are fundamental building blocks of neural networks. Calculus is used to optimize the neural network parameters during training, and probability is used to model the uncertainty and randomness in the data.

Step 2: Learn about basic machine learning concepts

Before diving into deep learning, it is essential to have a good understanding of basic machine learning concepts such as supervised and unsupervised learning, overfitting, and underfitting. Supervised learning involves training a model to map input data to output labels, while unsupervised learning involves discovering patterns and structure in the data without explicit labels. Overfitting occurs when the model learns the training data too well and fails to generalize to new data, while underfitting occurs when the model is too simple and cannot capture the complexity in the data.

Step 3: Familiarize yourself with popular deep learning frameworks

There are several deep learning frameworks available, such as TensorFlow, PyTorch, and Keras. These frameworks provide pre-built functions and modules to help you build neural networks more efficiently. In this article, we will use TensorFlow as an example.

To install TensorFlow, you can use pip, a package manager for Python. Open a terminal or command prompt and type the following command:

```bash
pip install tensorflow
```

Once installed, you can import TensorFlow in Python as follows:

```python
import tensorflow as tf
```

Step 4: Study neural networks and their components

Neural networks are the building blocks of deep learning. They are composed of layers of interconnected nodes called neurons that perform computations on the input data. Neural networks consist of several components, including activation functions, forward propagation, backpropagation, and optimization algorithms like gradient descent.

Activation functions are used to introduce non-linearity into the neural network, allowing it to learn complex patterns in the data. Some commonly used activation functions are sigmoid, tanh, and ReLU.
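As a rough illustration of the three activation functions named above, the following pure-Python sketch evaluates each one on a few sample inputs. The function names and sample values are chosen here for illustration and are not taken from any framework.

```python
import math

def sigmoid(x: float) -> float:
    """Squash x into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x: float) -> float:
    """Squash x into the open interval (-1, 1)."""
    return math.tanh(x)

def relu(x: float) -> float:
    """Pass positive values through unchanged and clamp negatives to 0."""
    return max(0.0, x)

samples = [-2.0, 0.0, 2.0]  # illustrative inputs
for x in samples:
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.3f}  tanh={tanh(x):+.3f}  relu={relu(x):.1f}")
```

All three are non-linear, which is what lets stacked layers represent more than a single linear map.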

Forward propagation involves passing the input data through the neural network and computing the output. The output is then compared to the actual labels to compute the loss, which measures the difference between the predicted and actual values.
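The forward pass and loss computation described above can be sketched for a tiny 2 → 2 → 1 network in plain Python. The weights, input, and label below are hypothetical values picked so the arithmetic is easy to follow by hand; a real network would learn its weights during training.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: out[j] = sum_i inputs[i] * weights[i][j] + biases[j]."""
    return [
        sum(x * w_row[j] for x, w_row in zip(inputs, weights)) + biases[j]
        for j in range(len(biases))
    ]

def relu(values):
    return [max(0.0, v) for v in values]

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def binary_cross_entropy(y_true, y_pred):
    """Loss for one example with a 0/1 label and a predicted probability."""
    return -(y_true * math.log(y_pred) + (1.0 - y_true) * math.log(1.0 - y_pred))

# Hypothetical fixed parameters.
w1 = [[0.5, -1.0], [0.25, 1.0]]   # shape (2 inputs, 2 hidden units)
b1 = [0.0, 0.0]
w2 = [[1.0], [-1.0]]              # shape (2 hidden units, 1 output)
b2 = [0.0]

x = [2.0, 4.0]   # one input example
y = 1.0          # its true label

hidden = relu(dense(x, w1, b1))     # forward pass through the hidden layer
logit = dense(hidden, w2, b2)[0]    # forward pass through the output layer
prediction = sigmoid(logit)         # predicted probability of class 1
loss = binary_cross_entropy(y, prediction)
print(f"hidden={hidden}  prediction={prediction:.3f}  loss={loss:.3f}")
```

With these values both hidden units output 2.0, the output logit is 0, and the predicted probability is 0.5, so the loss equals ln 2.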

Backpropagation is used to compute the gradients of the loss with respect to the neural network parameters. These gradients are then used to update the parameters during training, using optimization algorithms such as gradient descent.
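To make the gradient-then-update loop concrete, here is a minimal sketch for a model with a single weight, where the chain rule can be written out by hand. Backpropagation in a real network applies the same rule layer by layer; the data, learning rate, and step count are illustrative assumptions.

```python
def loss(w, xs, ys):
    """Mean squared error of the one-parameter model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def grad(w, xs, ys):
    """dLoss/dw via the chain rule: mean of 2 * (w*x - y) * x."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]     # generated by y = 2x, so the best weight is 2

w = 0.0                  # initial guess
learning_rate = 0.05     # illustrative step size
for step in range(100):
    w -= learning_rate * grad(w, xs, ys)   # gradient descent update

# Sanity check: compare the analytic gradient with a central finite difference.
eps = 1e-6
numeric = (loss(1.0 + eps, xs, ys) - loss(1.0 - eps, xs, ys)) / (2 * eps)
print(f"learned w={w:.4f}  analytic grad at w=1: {grad(1.0, xs, ys):.4f}  numeric: {numeric:.4f}")
```

Comparing an analytic gradient against a finite difference like this is a common way to catch mistakes in a hand-written derivative.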

Example code for creating a simple neural network in TensorFlow:

```python
import tensorflow as tf

# Define the input and output dimensions
input_dim = 10
output_dim = 1

# Define the layers of the neural network
model = tf.keras.Sequential([
    tf.keras.Input(shape=(input_dim,)),  # declares the input shape (replaces the older input_dim argument)
    tf.keras.layers.Dense(32, activation='relu'),
    tf.keras.layers.Dense(16, activation='relu'),
    tf.keras.layers.Dense(output_dim, activation='sigmoid')
])

# Compile the model with a loss function and optimizer
model.compile(loss='binary_crossentropy', optimizer='adam')

# Train the model on some placeholder data (all ones, just to exercise the API)
x_train = tf.ones((100, input_dim))
y_train = tf.ones((100, output_dim))
model.fit(x_train, y_train, epochs=10)
```

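This code defines a simple neural network with three Dense layers: two hidden layers with 32 and 16 units and ReLU activations, followed by a single-unit sigmoid output layer. The model is compiled with binary cross-entropy loss and the Adam optimizer, then trained for 10 epochs on placeholder data.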

Once you have selected a dataset, you can begin to preprocess it to ensure that it is in a suitable format for deep learning algorithms. This typically involves steps like normalization, data augmentation, and splitting the data into training, validation, and test sets.
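Two of the preprocessing steps just mentioned, normalization and splitting, can be sketched without any framework. The helper names, the 70/15/15 split, and the sample values are assumptions made for this illustration only.

```python
import random

def min_max_normalize(values):
    """Rescale numbers linearly into [0, 1]; assumes the values are not all equal."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def train_val_test_split(items, val_fraction=0.15, test_fraction=0.15, seed=0):
    """Shuffle a copy of items and cut it into train/validation/test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)   # fixed seed keeps the split repeatable
    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

pixels = [0, 64, 128, 255]              # illustrative raw pixel values
normalized = min_max_normalize(pixels)

samples = list(range(100))              # stand-in for 100 dataset examples
train, val, test = train_val_test_split(samples)
print(normalized)
print(len(train), len(val), len(test))
```

Shuffling before splitting matters when the raw data is ordered, for example by class, because an unshuffled cut could leave a class out of the training set entirely.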

Here is some example code for preprocessing an image dataset using the TensorFlow library:

```python
import tensorflow as tf
from tensorflow.keras.preprocessing.image import ImageDataGenerator
# Note: recent TensorFlow releases mark ImageDataGenerator as deprecated in favor of
# tf.keras.utils.image_dataset_from_directory combined with preprocessing layers.

# Define the paths to the training and validation data
train_dir = 'path/to/training/data'
val_dir = 'path/to/validation/data'

# Define the image dimensions and batch size
img_width, img_height = 224, 224
batch_size = 32

# Define an ImageDataGenerator object to perform data augmentation
train_datagen = ImageDataGenerator(
    rescale=1./255,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True
)

# Define an ImageDataGenerator object for the validation set
val_datagen = ImageDataGenerator(rescale=1./255)

# Use the flow_from_directory method to create batches of data
train_data = train_datagen.flow_from_directory(
    train_dir,
    target_size=(img_height, img_width),  # Keras expects (height, width)
    batch_size=batch_size,
    class_mode='binary'
)
val_data = val_datagen.flow_from_directory(
    val_dir,
    target_size=(img_height, img_width),
    batch_size=batch_size,
    class_mode='binary'
)
```

In this code, we define the paths to the training and validation data, as well as the dimensions of the images and the batch size. We also define an ImageDataGenerator object to perform data augmentation on the training set and a separate ImageDataGenerator object for the validation set. Finally, we use the flow_from_directory method to create batches of data for training and validation.
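To give a feel for what two of those generator options do to an image, here is a simplified pure-Python sketch of rescaling and horizontal flipping on a tiny grayscale image stored as nested lists. It is only a picture of the idea: the real generator applies flips at random per image and also handles shear and zoom, which are omitted here.

```python
def rescale(image, factor=1.0 / 255):
    """Multiply every pixel by factor, mirroring the rescale=1./255 option."""
    return [[pixel * factor for pixel in row] for row in image]

def horizontal_flip(image):
    """Mirror the image left to right by reversing each row."""
    return [list(reversed(row)) for row in image]

# Illustrative 2x3 grayscale image with values in 0..255.
image = [
    [0, 51, 255],
    [102, 153, 204],
]
flipped = horizontal_flip(image)
scaled = rescale(flipped)
print(flipped)
print(scaled)
```

Only the training generator augments; the validation generator just rescales, so validation scores reflect unmodified images.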

Step 5: Dive into specialized neural network architectures

Once you have a good understanding of the basics of neural networks, it’s time to dive into specialized architectures that are designed for specific tasks.

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are a type of neural network designed for image recognition tasks. They work by convolving a set of filters over the input image, extracting features at different levels of abstraction as the layers stack up. These features are then passed through one or more fully connected layers to produce a final classification.
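The filter-sliding step can be sketched in a few lines of plain Python. This is a minimal illustration with stride 1 and no padding; like most deep learning libraries it computes cross-correlation (the kernel is not flipped). The image and the vertical-edge filter are made-up examples, whereas a CNN learns its filter values during training.

```python
def conv2d_valid(image, kernel):
    """Slide kernel over image with stride 1 and no padding, returning the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map

# A 4x5 image that is dark on the left and bright on the right.
image = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]
# Illustrative 3x3 filter that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
feature_map = conv2d_valid(image, kernel)
print(feature_map)
```

The response is zero where the window covers a flat region and positive where it straddles the edge, which is the sense in which a filter "detects" a feature.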

Here is some example code for building a simple CNN using the Keras library:

```python
import tensorflow as tf
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense

# Define the input shape and number of classes
input_shape = (224, 224, 3)
num_classes = 10

# Define the model architecture
model = tf.keras.Sequential([
    tf.keras.Input(shape=input_shape),  # declares the input shape up front
    Conv2D(32, (3, 3), activation='relu'),
    MaxPooling2D((2, 2)),
    Conv2D(64, (3, 3), activation='relu'),
    MaxPooling2D((2, 2)),
    Conv2D(128, (3, 3), activation='relu'),
    MaxPooling2D((2, 2)),
    Flatten(),
    Dense(128, activation='relu'),
    Dense(num_classes, activation='softmax')
])

# Compile the model with an optimizer and loss function
# (categorical_crossentropy expects one-hot encoded labels)
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
```

In this code, we define the input shape of the images and the number of classes in the dataset. We then define the architecture of the CNN using a sequence of Conv2D and MaxPooling2D layers, followed by a Flatten step and two fully connected Dense layers. Finally, we compile the model with the Adam optimizer, categorical cross-entropy loss, and accuracy as the evaluation metric.

Learn about Basic Machine Learning Concepts

Before diving into deep learning, it’s important to have a solid understanding of basic machine learning concepts. Here are a few key concepts to get started with:

Supervised Learning

Supervised learning is a type of machine learning where the algorithm is trained on labeled data. Labeled data consists of input features and corresponding output labels. The goal of supervised learning is to learn a function that maps the input features to the output labels.

Here’s an example of supervised learning:

Suppose we have a dataset of images of handwritten digits and their corresponding labels (0-9). We can use a supervised learning algorithm to train a neural network to predict the correct label for a given image.
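The core idea, learning a mapping from labeled examples and applying it to new inputs, can be shown with something far smaller than a neural network. The sketch below swaps in a 1-nearest-neighbor classifier and a hypothetical toy dataset purely to keep the example short.

```python
def nearest_neighbor_predict(train_features, train_labels, query):
    """Return the label of the training example closest to query."""
    def squared_distance(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    best_index = min(
        range(len(train_features)),
        key=lambda i: squared_distance(train_features[i], query),
    )
    return train_labels[best_index]

# Hypothetical labeled data: (height_cm, weight_kg) -> species label.
train_features = [(20.0, 4.0), (25.0, 5.0), (60.0, 30.0), (70.0, 35.0)]
train_labels = ["cat", "cat", "dog", "dog"]

print(nearest_neighbor_predict(train_features, train_labels, (22.0, 4.5)))
print(nearest_neighbor_predict(train_features, train_labels, (65.0, 32.0)))
```

A neural network plays the same role as `nearest_neighbor_predict` here, but it compresses the training data into learned weights instead of storing every example.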

Unsupervised Learning

Unsupervised learning is a type of machine learning where the algorithm is trained on unlabeled data. Unlabeled data consists of input features without any corresponding output labels. The goal of unsupervised learning is to learn the underlying structure or patterns in the data.

Here’s an example of unsupervised learning:

Suppose we have a dataset of customer purchase histories. We can use an unsupervised learning algorithm to group customers into clusters based on their purchasing behavior.
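One common clustering algorithm is k-means. The sketch below is a deliberately small one-dimensional version, run on hypothetical monthly spend figures with hand-picked starting centroids; a practical implementation would handle multiple features and choose starting points more carefully.

```python
def k_means_1d(values, centroids, iterations=10):
    """Tiny 1-D k-means: alternate an assignment step and a centroid update step."""
    centroids = list(centroids)
    assignments = [0] * len(values)
    for _ in range(iterations):
        # Assignment step: each value joins its nearest centroid.
        assignments = [
            min(range(len(centroids)), key=lambda k: abs(v - centroids[k]))
            for v in values
        ]
        # Update step: move each centroid to the mean of its members.
        for k in range(len(centroids)):
            members = [v for v, a in zip(values, assignments) if a == k]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[k] = sum(members) / len(members)
    return centroids, assignments

# Hypothetical monthly spend per customer, with no labels attached.
monthly_spend = [12.0, 15.0, 18.0, 95.0, 100.0, 105.0]
centroids, assignments = k_means_1d(monthly_spend, centroids=[0.0, 50.0])
print(centroids, assignments)
```

No labels are ever supplied; the two groups emerge only from how the values are distributed.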

Overfitting and Underfitting

Overfitting and underfitting are common problems in machine learning. Overfitting occurs when the model is too complex and captures noise in the training data. Underfitting occurs when the model is too simple and cannot capture the underlying patterns in the data.

Here’s an example of overfitting and underfitting:

Suppose we have a dataset of housing prices with features such as square footage, number of bedrooms, and location. If we train a linear regression model with only one feature (e.g., square footage), the model is likely to underfit, because price also depends on the features it ignores. If we train a high-degree polynomial regression model on a small dataset, it can fit the noise in the training examples and is likely to overfit.
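The gap between training error and held-out error can be demonstrated on a small synthetic dataset. This sketch does not use the housing data or polynomial regression; it swaps in three simple stand-in models: a constant predictor (too simple), a least-squares line, and a "memorizer" that returns the target of the nearest training point (too flexible). The data and noise pattern are invented for the illustration.

```python
def fit_constant(xs, ys):
    """Underfitting model: always predict the mean training target."""
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y

def fit_line(xs, ys):
    """Ordinary least-squares line through the training points."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        / sum((x - mean_x) ** 2 for x in xs)
    )
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept

def fit_memorizer(xs, ys):
    """Overfitting model: return the target of the closest training point."""
    def predict(x):
        nearest = min(range(len(xs)), key=lambda i: abs(xs[i] - x))
        return ys[nearest]
    return predict

def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Training data: the trend y = 2x plus a fixed alternating noise of +/-1.5.
train_x = [float(i) for i in range(8)]
noise = [1.5 if i % 2 == 0 else -1.5 for i in range(8)]
train_y = [2.0 * x + n for x, n in zip(train_x, noise)]
# Held-out points taken from the underlying trend.
test_x = [i + 0.4 for i in range(7)]
test_y = [2.0 * x for x in test_x]

models = {
    "constant (underfits)": fit_constant(train_x, train_y),
    "line": fit_line(train_x, train_y),
    "memorizer (overfits)": fit_memorizer(train_x, train_y),
}
errors = {
    name: (mse(m, train_x, train_y), mse(m, test_x, test_y))
    for name, m in models.items()
}
for name, (train_err, test_err) in errors.items():
    print(f"{name:22s} train MSE={train_err:6.2f}  test MSE={test_err:6.2f}")
```

The memorizer reaches zero training error yet does worse than the line on held-out points, while the constant model is poor on both; that pattern is the usual signature of overfitting and underfitting respectively.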

Familiarize Yourself with Popular Deep Learning Frameworks

Deep learning frameworks provide pre-built functions and modules to help you build neural networks more efficiently. Two popular deep learning frameworks are TensorFlow and PyTorch.

TensorFlow

TensorFlow is an open-source deep learning framework developed by Google. It allows you to build and train neural networks for a wide range of applications, including image recognition, natural language processing, and time series analysis.

Here’s an example of building a simple neural network using TensorFlow:

```python
import tensorflow as tf

# train_images, train_labels, test_images, and test_labels are assumed to be
# loaded already, with integer class labels in the range 0-9.

# Define the model architecture
model = tf.keras.models.Sequential([
    tf.keras.layers.Dense(64, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

# Compile the model
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Train the model
model.fit(train_images, train_labels, epochs=10)

# Evaluate the model
test_loss, test_acc = model.evaluate(test_images, test_labels)
print('Test accuracy:', test_acc)
```

This code defines a simple neural network with one hidden layer and an output layer. The Dense layers are fully connected layers, and the activation function for the hidden layer is ReLU. The output layer uses a softmax activation function, which outputs a probability distribution over the 10 possible classes. The model is compiled with the Adam optimizer, the sparse categorical crossentropy loss function, and accuracy as the evaluation metric.
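For readers who want to see what the softmax output layer computes, here is a small pure-Python sketch. It uses three classes instead of ten to keep the numbers readable, and the raw scores are made up.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    shift = max(scores)                          # subtracting the max avoids overflow
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

scores = [2.0, 1.0, 0.1]      # illustrative raw outputs for 3 classes
probs = softmax(scores)
predicted_class = probs.index(max(probs))
print([round(p, 3) for p in probs], "predicted class:", predicted_class)
```

The class with the largest raw score always gets the largest probability, so softmax changes how confident the output looks but not which class is predicted.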

PyTorch

PyTorch is another popular open-source deep learning framework, originally developed by Facebook's AI research group (now part of Meta). It builds its computational graph dynamically as the code runs, which allows for more flexible model architectures and easier debugging.

Here’s an example of building a simple neural network using PyTorch:

```python
import torch
import torch.nn as nn
import torch.optim as optim
```

Overfitting is a common problem in deep learning models, where the model performs well on the training data but poorly on the validation or test data. Regularization techniques can help prevent overfitting and improve model generalization.

One popular regularization technique is L2 regularization, often called weight decay (the two coincide for plain stochastic gradient descent). It adds a penalty term to the loss function that grows with the squared magnitude of the weights. This encourages the model to keep its weights small, which can lead to better generalization. The L2 regularization term is calculated as follows:

$$\text{L2 penalty} = \frac{\lambda}{2}\,\lVert w \rVert^2$$

where λ is the regularization strength and w is the weight vector.
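As a quick worked instance of the formula, the sketch below computes the penalty and its gradient for a two-element weight vector. The weights, the value of λ, and the stand-in data loss are all illustrative assumptions.

```python
def l2_penalty(weights, lam):
    """(lam / 2) * ||w||^2 for a flat list of weights."""
    return 0.5 * lam * sum(w * w for w in weights)

def l2_penalty_gradient(weights, lam):
    """Gradient of the penalty with respect to each weight: lam * w."""
    return [lam * w for w in weights]

weights = [3.0, -4.0]     # illustrative weight vector, so ||w||^2 = 25
lam = 0.01                # illustrative regularization strength

data_loss = 0.5           # stand-in for the loss computed on the training data
total_loss = data_loss + l2_penalty(weights, lam)
print(total_loss, l2_penalty_gradient(weights, lam))
```

Because the gradient of the penalty is λ times each weight, every update step pulls each weight toward zero in proportion to its size.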

Another regularization technique is dropout, which randomly drops out some of the neurons during training. This prevents the model from relying too heavily on any one neuron and encourages the model to learn more robust features. Dropout can be implemented using the Dropout layer in Keras:

```python
import tensorflow as tf
from tensorflow.keras.layers import Dropout

model = tf.keras.Sequential([
    # …
    Dropout(0.2),
    # …
])
```

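Here, we added a Dropout layer with a rate of 0.2, meaning that each unit feeding into the layer is zeroed with probability 0.2 at every training step (about 20% of them on average). Dropout is only active during training; at inference time the layer passes its inputs through unchanged.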
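The mechanics can be sketched in plain Python. This is a minimal version of "inverted" dropout, in which surviving units are scaled up by 1 / (1 - rate) so the expected sum stays the same; the function name, seed, and inputs are assumptions for the illustration.

```python
import random

def dropout(activations, rate, training, rng):
    """Inverted dropout: zero each unit with probability rate while training and
    scale the survivors by 1 / (1 - rate); return the inputs unchanged otherwise."""
    if not training:
        return list(activations)
    keep_scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else a * keep_scale for a in activations]

rng = random.Random(0)                 # fixed seed so the run is repeatable
activations = [1.0] * 10
train_out = dropout(activations, rate=0.2, training=True, rng=rng)
eval_out = dropout(activations, rate=0.2, training=False, rng=rng)
print(train_out)
print(eval_out)
```

Scaling during training rather than at inference is what allows the layer to be a pure pass-through when the model is used for prediction.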

Transfer Learning

Transfer learning is a technique where a pre-trained model is used as a starting point for a new task, instead of training a new model from scratch. This can save time and resources and can often lead to better performance, especially when the new task has limited training data.

One popular pre-trained model for image classification tasks is the VGG16 model, which was trained on the ImageNet dataset. We can use the pre-trained VGG16 model as a feature extractor and add our own classification layer on top. Here’s an example:

```python
from tensorflow.keras.applications import VGG16
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adam

num_classes = 10  # number of target classes; assumed here, matching the earlier CNN example

# Load pre-trained VGG16 model without classification layer
base_model = VGG16(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

# Add our own classification layer on top
x = base_model.output
x = Flatten()(x)
x = Dense(256, activation='relu')(x)
predictions = Dense(num_classes, activation='softmax')(x)

# Create new model with pre-trained VGG16 as feature extractor and our own classification layer
model = Model(inputs=base_model.input, outputs=predictions)

# Freeze pre-trained layers so they're not updated during training
for layer in base_model.layers:
    layer.trainable = False

# Compile model with Adam optimizer and categorical cross-entropy loss
# (learning_rate replaces the older lr argument)
model.compile(optimizer=Adam(learning_rate=0.001), loss='categorical_crossentropy', metrics=['accuracy'])
```

Here, we loaded the pre-trained VGG16 model without its classification layer, added our own classification layer on top, and froze the pre-trained layers so they’re not updated during training. We then compiled the model with the Adam optimizer and categorical cross-entropy loss.

Conclusion

Deep learning is a powerful subfield of machine learning that can be used to solve a wide range of problems, from image classification to natural language processing. To get started with deep learning, it’s important to have a strong foundation in linear algebra, calculus, and probability, and to be familiar with basic machine learning concepts such as supervised and unsupervised learning, overfitting, and underfitting.

Familiarizing yourself with popular deep learning frameworks like TensorFlow or PyTorch can help you build neural networks more efficiently.

In this section, we’ll explore how to use deep learning to solve a specific problem, namely image classification using a convolutional neural network.

Image Classification with Convolutional Neural Networks

Image classification is a common task in computer vision that involves identifying the contents of an image. One approach to solving this problem is to use a convolutional neural network (CNN).

Convolutional Neural Networks

A CNN is a type of neural network that is well-suited for image classification tasks. The basic idea behind a CNN is to use a set of filters to scan an input image, looking for certain features or patterns. Each filter produces a new “feature map” that captures the presence or absence of the feature in different regions of the image.

The filters used in a CNN are typically small (e.g., 3×3 or 5×5 pixels) and slide across the input image in a systematic way, using a technique called “convolution”. The resulting feature maps are then passed through additional layers of the neural network, which learn to combine and interpret the features to make a prediction about the image’s contents.

Building a CNN for Image Classification

Let's say we want to build a CNN to classify small color images into categories such as cats and dogs. Here are the steps we might follow:

Prepare the data: We'll need a dataset of labeled images to train our model. There are many publicly available datasets for this task, such as CIFAR-10, which contains 60,000 32×32 pixel color images in 10 classes (cat and dog among them), with 6,000 images per class. The Keras loader returns the dataset already split into 50,000 training images and 10,000 test images.

```python
import tensorflow as tf
from tensorflow.keras.datasets import cifar10

(train_images, train_labels), (test_images, test_labels) = cifar10.load_data()

# Normalize pixel values between 0 and 1
train_images, test_images = train_images / 255.0, test_images / 255.0
```

Define the model: We'll use the Keras API, a high-level neural network API that makes it easy to build and train models. We'll define a simple CNN with three convolutional layers, the first two each followed by max pooling, then a flatten step, a dropout layer, and two dense layers, the last of which outputs one raw score per class.

```python
from tensorflow.keras import layers, models

model = models.Sequential([
    layers.Input(shape=(32, 32, 3)),  # CIFAR-10 images: 32x32 pixels, 3 color channels
    layers.Conv2D(32, (3, 3), activation='relu'),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation='relu'),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation='relu'),
    layers.Flatten(),
    layers.Dropout(0.5),
    layers.Dense(64, activation='relu'),
    layers.Dense(10)  # raw scores (logits) for the 10 classes; no softmax here
])

model.summary()
```
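The shapes and parameter counts that the summary reports can be worked out by hand. The sketch below redoes that arithmetic in plain Python, assuming the Keras defaults used by the model above: no padding and stride 1 for the convolutions, and a pooling stride equal to the pool size. The helper names are made up for this example.

```python
def window_output_size(size, window, stride=1):
    """Spatial size after sliding a window (conv or pool) with no padding."""
    return (size - window) // stride + 1

def conv_params(kernel, in_channels, filters):
    """kernel*kernel*in_channels weights per filter, plus one bias per filter."""
    return kernel * kernel * in_channels * filters + filters

def dense_params(inputs, units):
    """One weight per input-unit pair, plus one bias per unit."""
    return inputs * units + units

size, channels = 32, 3   # CIFAR-10 input: 32x32 pixels, 3 channels
total = 0
# (kind, filters) pairs mirroring the convolution and pooling layers of the model.
for kind, filters in [("conv", 32), ("pool", None), ("conv", 64), ("pool", None), ("conv", 64)]:
    if kind == "conv":
        total += conv_params(3, channels, filters)
        size, channels = window_output_size(size, 3), filters
    else:
        size = window_output_size(size, 2, stride=2)
    print(kind, (size, size, channels))

flat = size * size * channels                       # output of Flatten
total += dense_params(flat, 64) + dense_params(64, 10)
print("flattened:", flat, "trainable parameters:", total)
```

Flatten and Dropout add no parameters, which is why only the convolution and dense layers appear in the total.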

Compile the model: Before we can train the model, we need to specify the optimizer, loss function, and evaluation metric. We’ll use the Adam optimizer, the sparse categorical cross-entropy loss function (since our labels are integers), and the accuracy metric.

```python
model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
              metrics=['accuracy'])
```
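Because the final Dense(10) layer has no softmax, the loss is told to expect raw scores through `from_logits=True`. The following pure-Python sketch shows, for a single example, roughly what such a loss computes: the negative log of the softmax probability assigned to the true class. The function name and sample values are illustrative and this is not the library's implementation.

```python
import math

def sparse_categorical_crossentropy_from_logits(label, logits):
    """Cross-entropy for one example: -log(softmax(logits)[label]).

    Uses the log-sum-exp trick so that large logits do not overflow.
    """
    shift = max(logits)
    log_sum_exp = shift + math.log(sum(math.exp(z - shift) for z in logits))
    return log_sum_exp - logits[label]

logits = [2.0, 1.0, 0.1]   # illustrative raw outputs for 3 classes
label = 0                  # integer class index, not a one-hot vector

loss_if_correct = sparse_categorical_crossentropy_from_logits(label, logits)
loss_if_wrong = sparse_categorical_crossentropy_from_logits(2, logits)
print(f"true class 0: {loss_if_correct:.3f}   true class 2: {loss_if_wrong:.3f}")
```

The "sparse" part refers only to the label format: an integer index is used to pick out one logit, which is why CIFAR-10's integer labels can be passed in directly.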

Train the model: We’ll train the model on the training data using the fit method. We’ll specify the number of epochs (passes through the dataset), the batch size, and the validation data (to monitor the model’s performance on unseen data).

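```python
history = model.fit(train_images, train_labels, epochs=10,
                    batch_size=64,  # assumed value; the original snippet is cut off after epochs=10
                    validation_data=(test_images, test_labels))
```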
